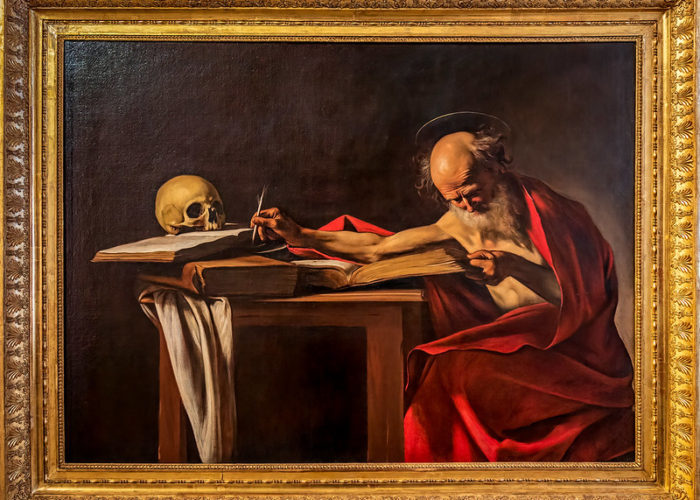

Happy International Translation Day! This celebration takes place on September 30 in honor of Saint Jerome, who translated the Bible into Latin, and who is the patron saint of translators. Little could Jerome have known, as he sat down to his arduous task, that 1,600 or so years later, machine translation would become a reality. So should the human translator worry about being replaced by a real-life C-3PO (who tells Luke Skywalker that he is “fluent in over 6 million forms of communication”)?

Not yet. Machine translation can be useful for getting basic information across, but its output still needs to be edited by a human translator to ensure accuracy (a process called “post-editing”).

Making Information Available

Machine translation (or MT) is appropriate for making basic information available in another language. On the English-language version of the French Culture Ministry’s website, the following disclaimer appears: “This page has been automatically translated from French into English by Reverso AI. Automatic translations are not as accurate as translations made by professional human translators, but they can help you understand information published regularly by the French Ministry of Culture and Communication.”

It’s an excellent idea for the Culture Ministry to let everyone know that these are machine translations and may contain some glitches. Before MT, these webpages were in all likelihood not translated at all, and having some information in English is better than nothing. As my colleague Maria Guzenko pointed out recently in a very informative post, MT “may be fine for casual, low-stakes communication that will not be publicized.”

Content That Matters

But what about communication that will be publicized? Consider situations where an organization wants to publish its research, attract new clients, or communicate key information. In these cases, a human translator is essential. (Here is a quick and easy guide to hiring a translator.) The ability to understand the message in the source language and craft an accurate and engaging translation in the target language cannot be replaced by a computer program.

Consider this: the same kind of probabilistic modeling that drives machine translation also produces the suggested replies in Gmail. If you were replying to an important email, would you pick one of Gmail’s three automatic replies and leave it at that? A choice among “OK, fine!”, “Yes, I can do it!” and “No, sorry” doesn’t exactly cut it.

Should Translators Try Machine Translation?

At a New York Circle of Translators meeting, I participated in an experiment where an excerpt of my translation of a French report on the right of asylum was compared to five machine translations of the same text. I reported on the results for the Gotham Translator, the NYCT newsletter, and here’s a brief summary.

Using the BLEU metric (Bilingual Evaluation Understudy), four of the five MT engines produced translations that would be considered very usable, though post-editing would still be necessary. Although these are respectable scores, I would not choose machine translation for projects like this one, where my translation must be of publishable quality. First I would need to check the MT output for accuracy. Then, to meet the standards of publishable writing, over half of the MT-produced excerpt would need stylistic editing. The translator’s role would shift from the creative act of translating to the tedious, time-consuming task of editing an unreliable translation.

Evaluating Machine Translation Results

Moreover, I’m not sure that the BLEU metric is all that valuable for evaluating machine translation. Oddly, Google Translate got the highest BLEU score, even though it produced this ungrammatical heading: “Why the right of asylum in Europe does not work?”
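To see why BLEU can reward a heading like that, it helps to know what the metric actually counts. Below is a minimal Python sketch of a simplified, sentence-level BLEU score. It assumes a single reference translation (the post does not say which reference was used in the NYCT experiment), naive lowercase whitespace tokenization, and no smoothing. Real toolkits such as sacreBLEU compute the score over a whole corpus and tokenize differently, so this is an illustration, not the scoring used in the experiment. The point is that BLEU only measures overlapping word sequences (n-grams) with the reference; it has no notion of grammar or style.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count every run of n consecutive tokens (as tuples)."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, reference, max_n=4):
    """Simplified single-reference, sentence-level BLEU without smoothing.

    Assumption: naive lowercase whitespace tokenization, so punctuation
    stays attached to words ("europe." and "europe!" are different tokens).
    """
    cand = candidate.lower().split()
    ref = reference.lower().split()
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        ref_counts = ngrams(ref, n)
        # Clipped ("modified") precision: a candidate n-gram earns credit at
        # most as many times as it occurs in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, any zero precision zeroes the score
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty: candidates shorter than the reference are penalized.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    # Geometric mean of the n-gram precisions, scaled by the brevity penalty.
    return bp * math.exp(sum(log_precisions) / max_n)


reference = "This situation clearly shows that there is a true “asylum lottery” in Europe."
mt_output = "The situation is such that one can speak of a real “asylum lottery” in Europe!"
print(sentence_bleu(reference, reference))  # 1.0
print(sentence_bleu(mt_output, reference))  # 0.0
```

With this naive tokenizer, the human and machine renderings of the “asylum lottery” sentence share no four-word sequence (partly because “Europe.” and “Europe!” count as different tokens), so the unsmoothed score is 0.0 even though the MT version is a reasonable, if clunky, translation. Conversely, an ungrammatical output can score well simply by reusing the reference’s words in the reference’s order.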

There were few out-and-out mistakes in the MT output, which was pretty impressive, although three of the five engines translated “shifting the burden” as “postponing the burden.” Here’s a comparison of the human and machine translations of one short sentence from the report:

Human translation:

This situation clearly shows that there is a true “asylum lottery” in Europe.

Machine translation:

- The situation is such that one can speak of a real “asylum lottery” in Europe!

- The situation is such that we can speak of a real “asylum lottery” in Europe!

- The situation is such that we can talk about a real “asylum Lottery” in Europe!

- The situation is such that we can talk about a real “asylum lottery” in Europe!

- The situation is such that we can speak of a real “asylum lottery” in Europe!

The literalism of MT makes for a clunky translation that needs stylistic editing in the case of publishable material.

Score: Saint Jerome, 1; robot, 0.

Top image: Saint Jerome Writing by Caravaggio in the Borghese Gallery. Photo Sergei Mutovkin via Flickr.